We've been using CI/CD in our company for ages, but only recently did we start running into the limits of the free Microsoft-hosted build agent:

- Only one pipeline job can run at a time

- Build minutes are limited per month (1,800 minutes for private projects)

- A pipeline job can run for a maximum of 60 minutes

I'm not sure why we never hit any of these limits before (or maybe I just wasn't personally affected), but now we needed a solution. Some options that crossed our minds:

- Spread out the pipeline runs, but that would limit their usefulness. Who wants their builds scheduled ONLY at 3 am?

- Buy additional Microsoft-hosted agents at $40 per agent per month. Not cheap, and it's a fixed price: even when you aren't using all 5 agents (the number we need at peak times), you still pay for them.

- Run our own build agents in Azure. We have some Visual Studio Enterprise subscriptions, so the self-hosted parallel jobs would be free (we'd only pay for the Azure VMs). There are lots of potential downsides to this option too, but it looked like the most fun to try out 😉.

If you aren't interested in the backstory and just want to create your own Azure DevOps build agents the way I (and Microsoft) do, head over to my GitHub repository and follow the technical setup steps.

The approach

I only wanted to use self-hosted agents if they could satisfy all my requirements:

- Have a self-hosted agent just like the Microsoft-hosted agents

- Automate the process as much as possible, and limit maintenance

- Keep the self-hosted agent up to date when Microsoft updates their hosted agents

- Automatically scale agents up and down with usage, to get the most out of the "pay for what you use" model

- Be cheaper on a monthly basis than buying 5 Microsoft-hosted agents

Azure virtual machine scale set agents

I know we need about 5 agents at peak times to keep pipeline wait times down. Some pipelines run briefly and others take longer, but 5 available agents should cover it.

My first idea was to run one VM with good specs and install the DevOps agent on it 5 times, with automated start and shutdown timers. This way it would boot up in the morning and shut down in the evening, minimizing spending when load was expected to be low anyway. The downside: if you need to run a pipeline outside this time window, for whatever reason, no agent is available.

I remembered that Azure has Virtual Machine Scale Sets for exactly this purpose: scaling the number of virtual machines up and down depending on the load. While looking into whether I could tweak that idea for my purposes, I learned about a fairly recent addition to Azure DevOps: Virtual Machine Scale Set Agents!

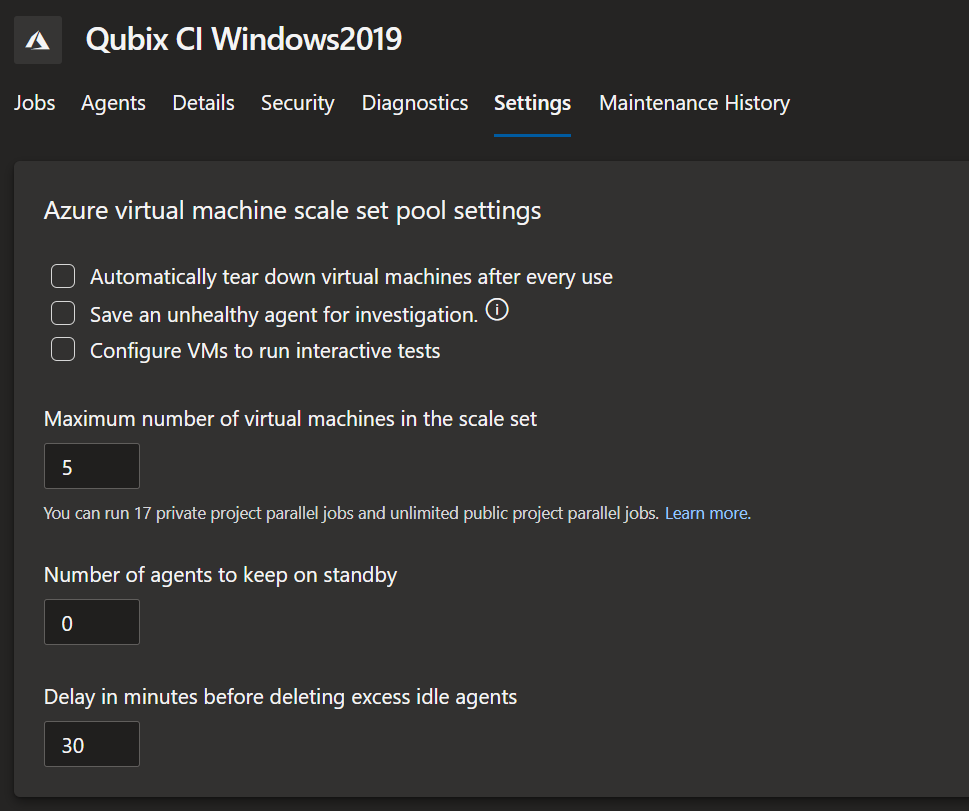

Virtual Machine Scale Set Agents are agents that run in an Azure Virtual Machine Scale Set, and this scale set is managed by Azure DevOps. Some of the management options:

- Number of agents to keep on standby: how many agents should always be provisioned and waiting for work. This can be 0.

- Delay in minutes before deleting excess idle agents: how long DevOps will leave a VM running idle, without a pipeline job, before decommissioning the VM.

- Maximum number of virtual machines in the scale set: the limit on how many VMs Azure DevOps can spin up.

This sounded exactly like what I needed, so I followed the documentation on docs.microsoft.com to create two scale sets: one for Windows agents and one for Ubuntu agents. Afterwards, I registered both scale sets as agent pools in my Azure DevOps organisation.
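To make the three settings above a bit more concrete, here is a minimal Python sketch of how they could interact. It is a simplified model built on my own assumptions, not the actual scaling algorithm Azure DevOps uses: the pool aims for enough VMs to cover every running and queued job plus the standby buffer, never exceeds the maximum, and only removes VMs that have sat idle longer than the configured delay. `PoolSettings`, `Vm` and `plan_scaling` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PoolSettings:
    """Hypothetical mirror of the three scale set agent pool options."""
    standby_agents: int       # agents to keep provisioned and waiting (may be 0)
    idle_delay_minutes: int   # how long a VM may sit idle before it is removed
    max_vms: int              # upper limit on VMs in the scale set


@dataclass
class Vm:
    busy: bool
    idle_minutes: int = 0     # minutes since this VM finished its last job


def plan_scaling(settings: PoolSettings, vms: list[Vm], queued_jobs: int) -> tuple[int, int]:
    """Return (vms_to_add, vms_to_remove) under a simplified scaling model."""
    busy = sum(1 for vm in vms if vm.busy)
    # Target: one VM per running or queued job, plus the standby buffer, capped at max_vms.
    # Existing idle VMs count toward the target, so they pick up queued jobs first.
    desired = min(settings.max_vms, busy + queued_jobs + settings.standby_agents)
    to_add = max(0, desired - len(vms))

    # Only idle VMs past the delay may be removed, and never below the target.
    surplus = len(vms) - desired
    expired = sum(
        1 for vm in vms
        if not vm.busy and vm.idle_minutes >= settings.idle_delay_minutes
    )
    to_remove = max(0, min(surplus, expired))
    return to_add, to_remove


if __name__ == "__main__":
    settings = PoolSettings(standby_agents=1, idle_delay_minutes=30, max_vms=5)
    # Peak: 2 busy VMs and 4 queued jobs, so grow, but never past max_vms.
    print(plan_scaling(settings, [Vm(busy=True), Vm(busy=True)], queued_jobs=4))  # (3, 0)
    # Evening: 3 idle VMs, two of them idle longer than the delay.
    evening = [Vm(busy=False, idle_minutes=45), Vm(busy=False, idle_minutes=10),
               Vm(busy=False, idle_minutes=40)]
    print(plan_scaling(settings, evening, queued_jobs=0))  # (0, 2)
```

In this model, a quiet evening with three idle VMs, two of them past the 30-minute delay, shrinks the pool back to the single standby agent, which is the "pay for what you use" behaviour I was after.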

Scale set VM image

A scale set provisions new VMs from a specific image. While that can be a standard Windows Server 2019 or Ubuntu 20.04 image from the Azure Marketplace, I wanted my build agents to be as close to the Microsoft-hosted agents as possible. Luckily, Microsoft has open-sourced the scripts they use to provision their build agents on GitHub.

They use Packer by HashiCorp to build their machine images automatically. Packer runs through a set of instructions in order, and at the end you have a fully configured virtual machine.

In essence, this is the process Packer follows:

- Provision a VM in Azure where Packer will be executed

- Provision a VM in Azure where Packer will install all the prerequisites

- Sysprep the VM at the end of the process

- Keep the disk as a .vhd file

- Destroy both the orchestrator VM and the fully provisioned VM

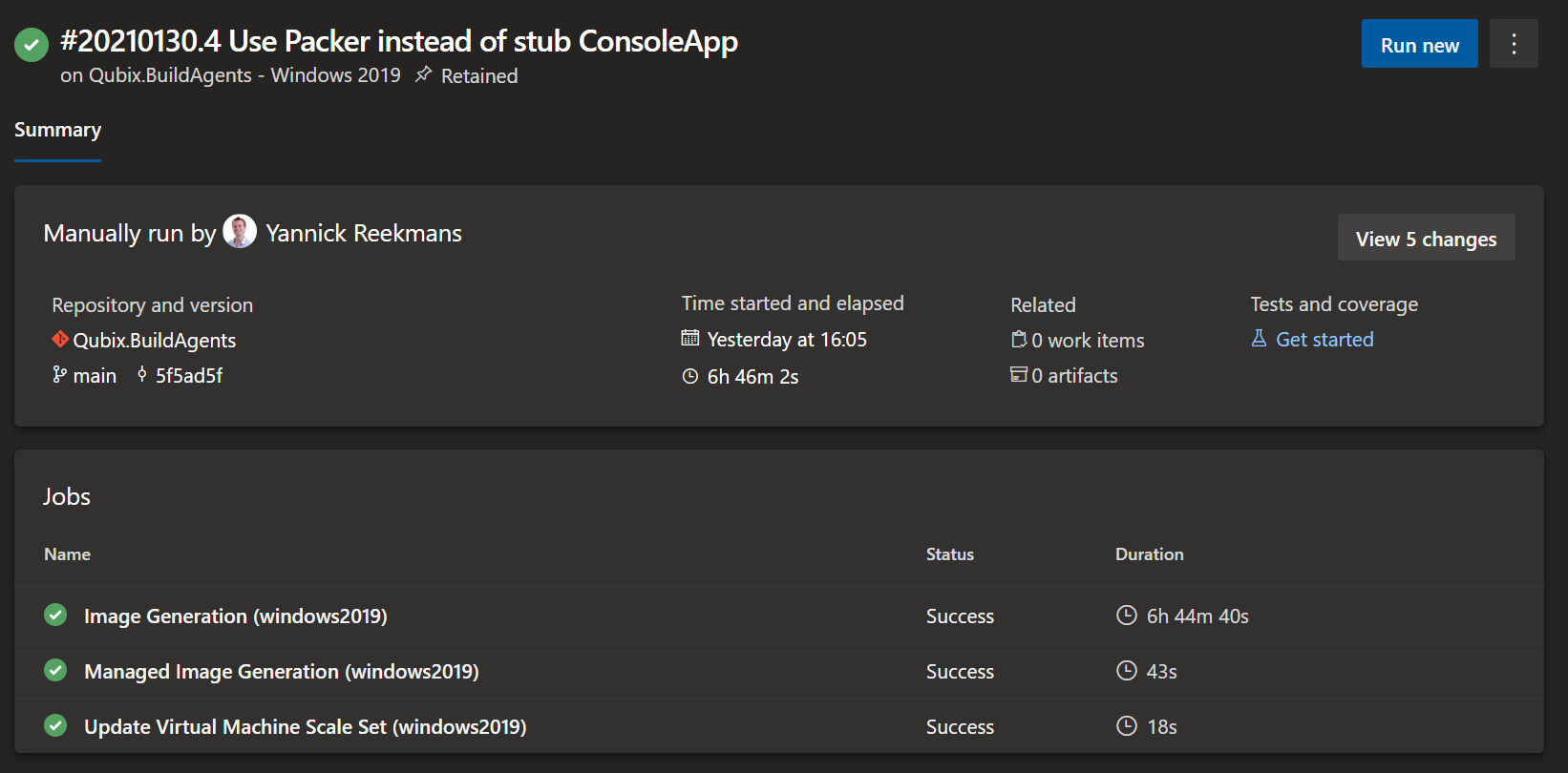

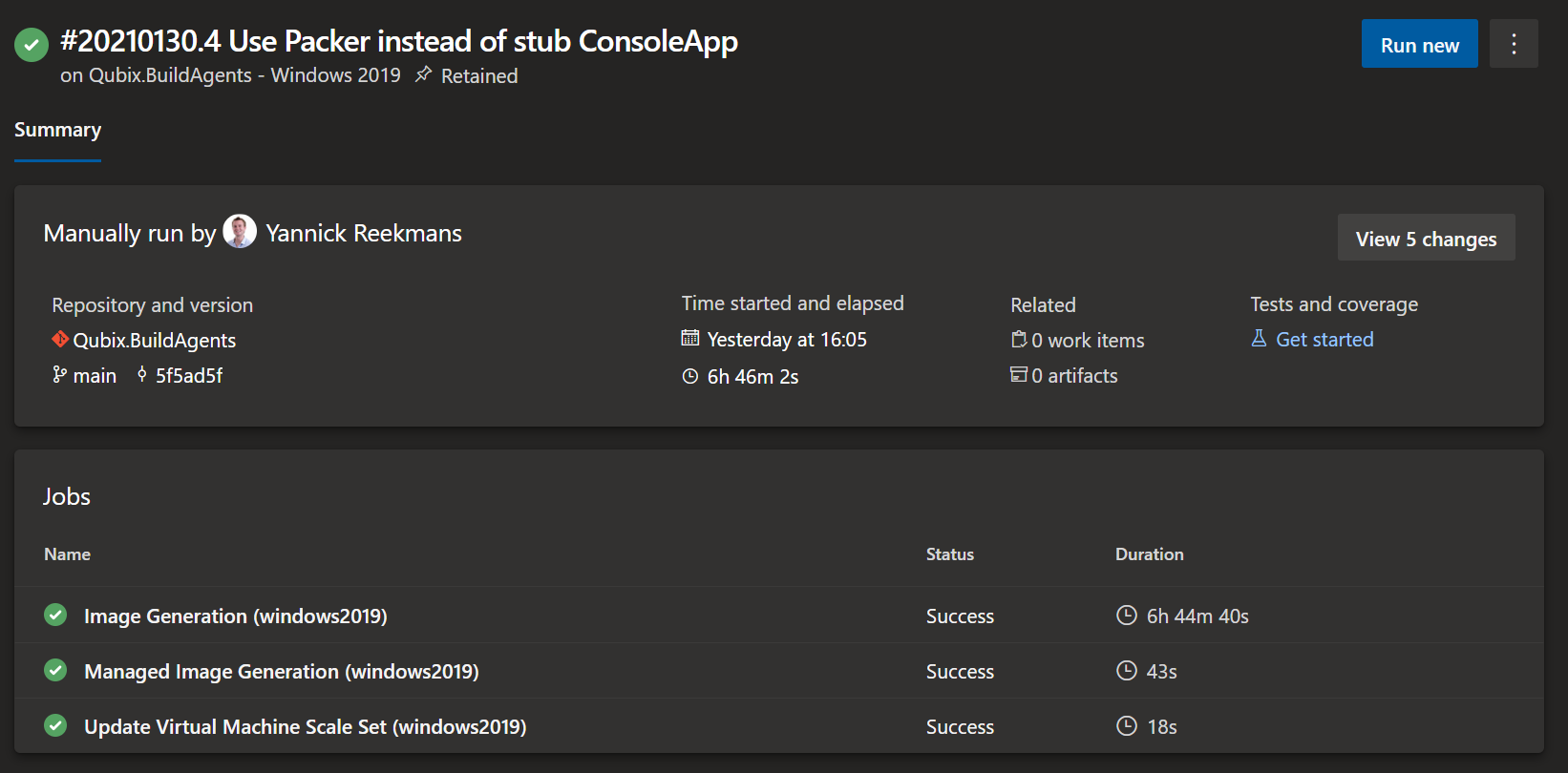

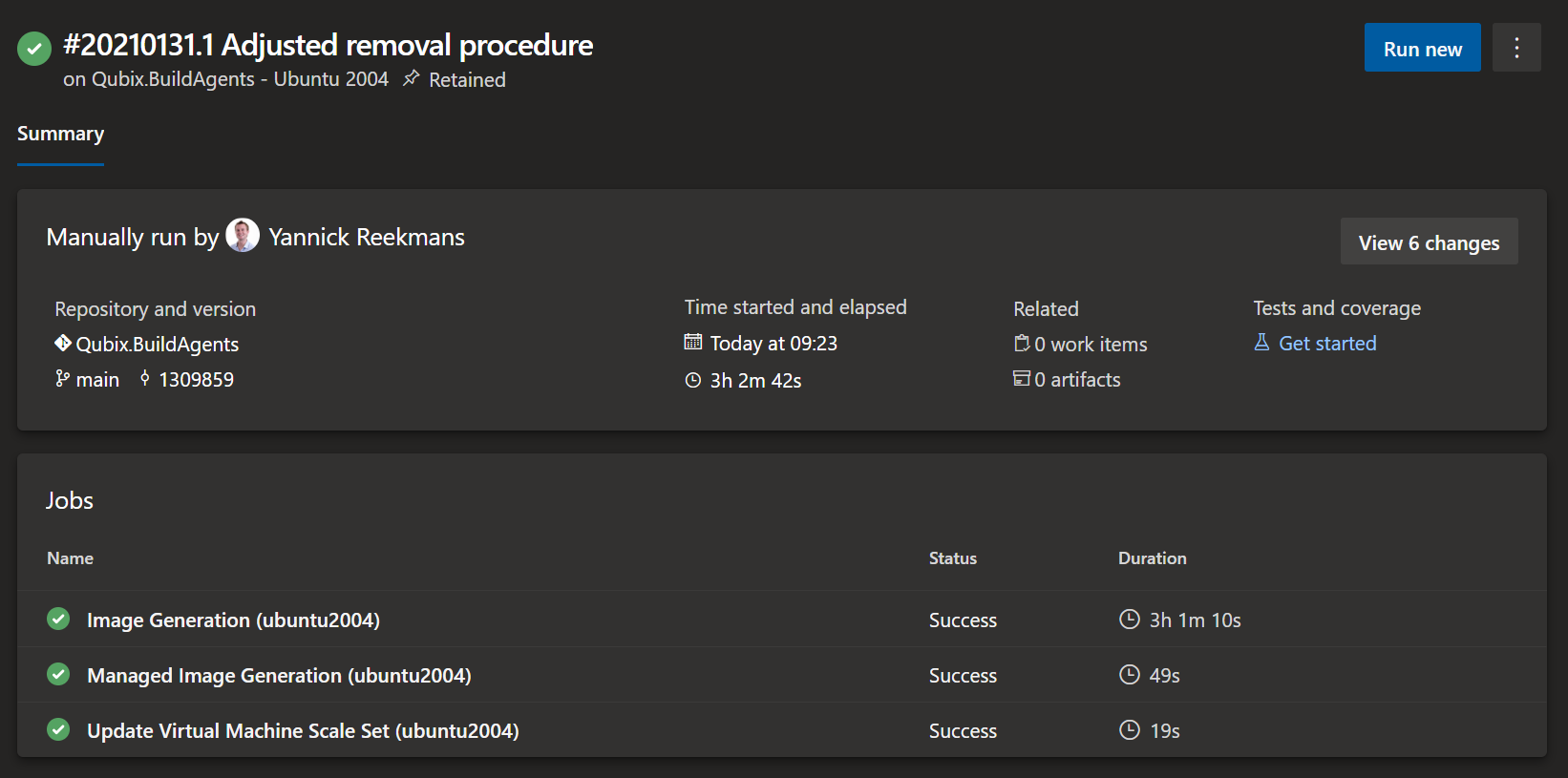

I tweaked this process in my own Azure DevOps pipeline to:

- Git clone the actions/virtual-environments repository

- Execute Packer with resources from the newly cloned repository

- Turn the resulting .vhd into an Azure Managed Image

- Update the Virtual Machine Scale Set to use the new Azure Managed Image for the next provisioning of a VM
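The four pipeline steps above can be sketched as the commands below. This is a hedged, dry-run Python sketch rather than my actual pipeline: it only prints the commands. The `git clone`, `packer build`, `az image create` and `az vmss update` invocations use real CLI options, but the template path, the Packer variable file, the VHD URI and all resource names are hypothetical placeholders, and Microsoft's templates expect their own set of variables (Azure credentials, storage account and so on) that I don't reproduce here.

```python
import shlex
from dataclasses import dataclass


@dataclass
class ImageBuildConfig:
    # All values are hypothetical placeholders; substitute your own.
    subscription_id: str
    resource_group: str
    scale_set: str
    image_name: str
    os_type: str          # "Windows" or "Linux", as accepted by `az image create --os-type`
    packer_template: str  # template path inside the cloned repo (assumed; check the repo)
    packer_var_file: str  # hypothetical JSON file with the template's variables
    vhd_uri: str          # blob URI of the .vhd that Packer leaves behind


def build_commands(cfg: ImageBuildConfig) -> list[list[str]]:
    """Return the four pipeline steps as argv lists, in execution order."""
    image_id = (
        f"/subscriptions/{cfg.subscription_id}/resourceGroups/{cfg.resource_group}"
        f"/providers/Microsoft.Compute/images/{cfg.image_name}"
    )
    return [
        # 1. Clone Microsoft's image-generation scripts.
        ["git", "clone", "https://github.com/actions/virtual-environments.git"],
        # 2. Run Packer with a template from the cloned repository.
        ["packer", "build", f"-var-file={cfg.packer_var_file}", cfg.packer_template],
        # 3. Turn the resulting .vhd into an Azure Managed Image.
        ["az", "image", "create",
         "--resource-group", cfg.resource_group,
         "--name", cfg.image_name,
         "--os-type", cfg.os_type,
         "--source", cfg.vhd_uri],
        # 4. Point the scale set model at the new image for future VMs.
        ["az", "vmss", "update",
         "--resource-group", cfg.resource_group,
         "--name", cfg.scale_set,
         "--set", f"virtualMachineProfile.storageProfile.imageReference.id={image_id}"],
    ]


if __name__ == "__main__":
    cfg = ImageBuildConfig(
        subscription_id="00000000-0000-0000-0000-000000000000",
        resource_group="rg-buildagents",
        scale_set="vmss-windows-agents",
        image_name="win2019-agent-image",
        os_type="Windows",
        packer_template="virtual-environments/images/win/windows2019.json",
        packer_var_file="packer-vars.json",
        vhd_uri="https://examplestorage.blob.core.windows.net/images/win2019.vhd",
    )
    # Dry run: print each step instead of executing it.
    for argv in build_commands(cfg):
        print(shlex.join(argv))
```

Printing the commands instead of running them keeps the sketch safe to try; to actually execute a step you could pass its argument list to `subprocess.run(argv, check=True)`.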

Success: (almost) all my requirements are fulfilled:

✔ Have a self-hosted agent just like the Microsoft-hosted agents

✔ Automate the process as much as possible, and limit maintenance

✔ Keep the self-hosted agent up to date when Microsoft updates their hosted agents

✔ Automatically scale agents up and down with usage, to get the most out of the "pay for what you use" model

❔ Be cheaper on a monthly basis than buying 5 Microsoft-hosted agents: I don't know yet, as I still need to switch more pipelines over to the new agent pool.
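Filling in that question mark comes down to a bit of arithmetic. Below is a back-of-the-envelope Python sketch: the $40 per agent per month and the 5 agents at peak come from this post, while the price per VM-hour and any fixed extra costs are hypothetical inputs you would need to look up for your own VM size and region.

```python
# Monthly cost comparison: a back-of-the-envelope sketch.
# The $40/agent/month price and the 5-agent peak come from the post;
# the VM-hour rate and fixed costs are hypothetical inputs.

HOSTED_PRICE_PER_AGENT = 40.0  # USD per Microsoft-hosted agent per month
PEAK_AGENTS = 5


def hosted_monthly_cost(agents: int = PEAK_AGENTS) -> float:
    """Fixed price: paid whether or not the agents are busy."""
    return agents * HOSTED_PRICE_PER_AGENT


def scale_set_monthly_cost(vm_hours: float, rate_per_vm_hour: float,
                           fixed_costs: float = 0.0) -> float:
    """Pay-per-use: VM-hours consumed times the rate, plus fixed extras (e.g. image storage)."""
    return vm_hours * rate_per_vm_hour + fixed_costs


def break_even_vm_hours(rate_per_vm_hour: float, fixed_costs: float = 0.0) -> float:
    """Total VM-hours per month at which both options cost the same."""
    return (hosted_monthly_cost() - fixed_costs) / rate_per_vm_hour


if __name__ == "__main__":
    rate = 0.50  # hypothetical USD per VM-hour
    print(hosted_monthly_cost())              # 200.0
    print(break_even_vm_hours(rate))          # 400.0
    print(scale_set_monthly_cost(300, rate))  # 150.0
```

With the hypothetical $0.50 per VM-hour from the example, the scale set stays cheaper as long as all agents together run for fewer than 400 VM-hours a month, so the actual usage once more pipelines are switched over is what decides the outcome.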

The gotchas

Pipeline runtime

Packer takes a good amount of time to generate the sysprepped, fully prepared image. Building a Windows Server 2019 image that is identical to the Microsoft-hosted agent takes about 7 hours.

The same process for an Ubuntu 20.04 image takes about 3 hours.

Luckily, the process is fully automated, and you don't need to refresh the images every day. I run this pipeline once a month, so every month we get an updated base image for our build agents.

Chicken-and-egg problem

We saw that Packer takes longer than 1 hour to generate the base image, but on the free tier of Microsoft-hosted agents a pipeline can only run for 1 hour. It's a chicken-and-egg problem: we need the output of this pipeline to run this pipeline...

The initial run of my pipeline was done on a paid Microsoft-hosted agent, which we were testing in parallel. After my pipeline succeeded I switched it over to use my freshly generated scale set agent pool for subsequent runs.

Alternatively, you could run Packer on your local computer overnight to generate the first VM image, and then use that image to build your scale set agent pool.

Failing builds

These images contain an enormous amount of installed tools, and some get installed directly from the internet in their latest version. This means that without any new commits, a build that succeeded yesterday can fail today.

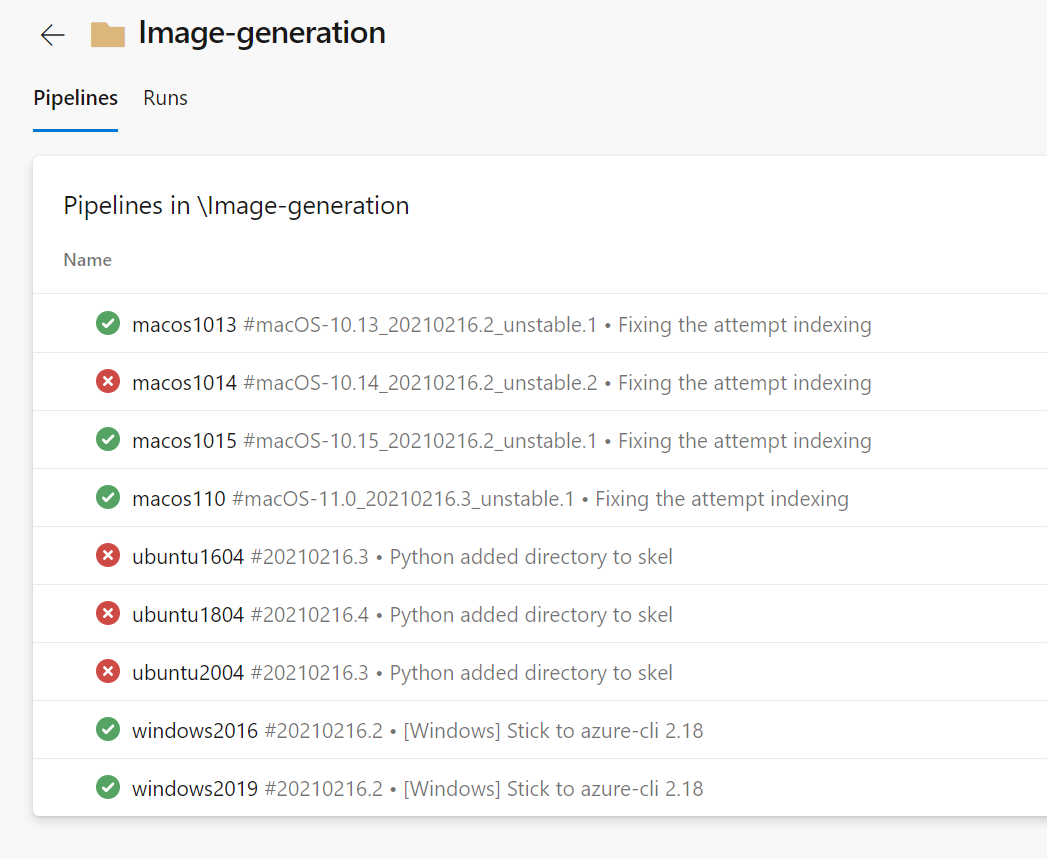

The easiest way to determine whether the problem lies in your pipeline or stems from the Packer scripts is to check the Microsoft pipelines that run the Packer build on every commit to their repository.

The result

I published my build pipelines and the setup instructions on GitHub: YannickRe/azuredevops-buildagents (Generate self-hosted build agents for Azure DevOps, just like Microsoft does).

It takes some configuration in Azure and Azure DevOps to get it right, but hopefully you'll find the result worth it!